
"soup = BeautifulSoup(html_data, \"html5lib \") " "Parse the html data using `beautiful_soup`. Save the text of the response as a variable named `html_data`.\n"

#Beautiful soup github webscraper download#

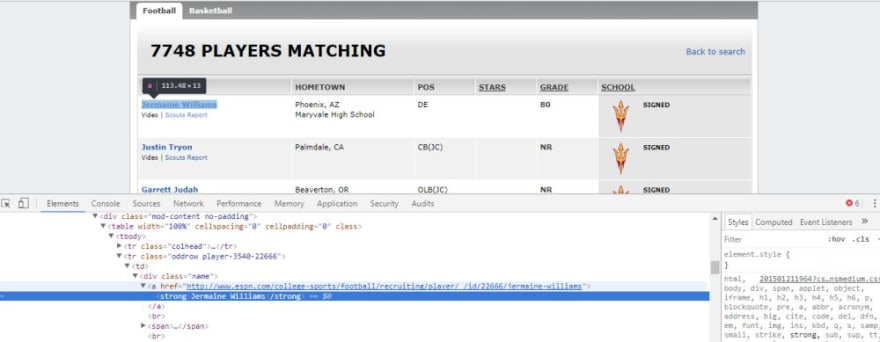

"Use the `requests` library to download the webpage (). "# Using Webscraping to Extract Stock Data \n " "Successfully installed beautifulsoup4-4.9.3 bs4-0.0.1 soupsieve-2.2.1 \n " "Installing collected packages: soupsieve, beautifulsoup4, bs4 \n ", "Building wheels for collected packages: bs4 \n ", "Collecting beautifulsoup4 (from bs4) \n ", " Extracting Data and Building DataFrame \n ", " Parsing Webpage HTML Using BeautifulSoup \n ", " Downloading the Webpage Using Requests Library \n ", " Using beautiful soup we will extract historical share data from a web-page. "Not all stock data is available via API in this assignment you will use web-scraping to obtain financial data.

#Beautiful soup github webscraper license#

This project is licensed under the MIT License; see the LICENSE.

#Beautiful soup github webscraper how to#

Please send me an email with the title 'Contributing to AmazonScrapper' if you have suggestions on how to make it better or would like to know more about pull requests.

#Beautiful soup github webscraper install#

NOTE: This repo is no longer maintained due to constant CSS changes on Amazon's website.

This project performs web scraping with the Python package Beautiful Soup. The user can enter any product category, and a CSV file with the Amazon ASIN codes, product name, price, and number of reviews is generated.

The initial inspiration for this project was to get a dump of Amazon ASIN codes, or IDs, for any product category. API requests on those ASIN codes can give a detailed analysis of the inventory. However, services usually rate-limit your requests or make you pay a decent fee. This can be a problem, as I found out when tracking kitchenware over a month to visualize fluctuations. Thus, I decided to make a minimal but effective version which doesn't need any API requests.

As the images below will indicate, this can also be invaluable to small and new businesses trying to gauge competition. Additionally, investors can use this to monitor an entire commodity space.

Remaining to-dos:

- Make this project more dynamic and deploy it in a presentable manner.
- There can be better validation for text parsing.
- Regex was experimented with but not used in this version.
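The category-to-CSV flow described above can be sketched as follows. This is not the project's actual code: the markup, class names, `data-asin` attribute layout, ASIN values, and helper names are all hypothetical, since the real site's HTML differs and changes often.

```python
import csv
import io

from bs4 import BeautifulSoup

# Hypothetical markup and made-up ASINs, for illustration only.
sample_html = """
<div class="product" data-asin="B000000001">
  <span class="name">Steel Whisk</span>
  <span class="price">$7.99</span>
  <span class="reviews">1,204</span>
</div>
<div class="product" data-asin="B000000002">
  <span class="name">Cast Iron Pan</span>
  <span class="price">$24.50</span>
  <span class="reviews">356</span>
</div>
"""

FIELDS = ["asin", "name", "price", "reviews"]


def span_text(item, css_class):
    """Return the stripped text of the first <span> with the given class."""
    return item.find("span", class_=css_class).get_text(strip=True)


def extract_products(html_data):
    """Collect one dict per product block found in the page."""
    soup = BeautifulSoup(html_data, "html.parser")
    products = []
    for item in soup.find_all("div", class_="product"):
        products.append({
            "asin": item["data-asin"],
            "name": span_text(item, "name"),
            "price": float(span_text(item, "price").lstrip("$")),
            "reviews": int(span_text(item, "reviews").replace(",", "")),
        })
    return products


def write_csv(products, handle):
    """Write the product dicts as CSV rows with a header line."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(products)


products = extract_products(sample_html)
buffer = io.StringIO()  # for a file, use open("products.csv", "w", newline="")
write_csv(products, buffer)
csv_text = buffer.getvalue()
```

Converting price and review counts to numbers at extraction time is one simple form of the text-parsing validation mentioned in the to-dos: a malformed value raises an error instead of silently landing in the CSV.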
